We exist in vast, endless storms of continually shifting information, far beyond what our biological senses can pick up and experience directly. We know this from technologies like sonar, ultrasound, infrared cameras, X-rays, and photos of deep space, and from animals that pick up on bioelectricity or have swarm-sensing capabilities via photoreceptors on their skin (or, in the case of people here, implanted magnets!).

We can do this ourselves, picking up on signals beyond our natural senses, via technology like the following:

(Listen to that one with audio on to hear the device working. The visual is just for debugging and for sharing what it sees with others; the experience itself is more directly perceptual, thanks to some fun neuroscience.)

This post tries to explain concepts from many different areas in a short read, which requires knowing a few terms:

Qualia are the subjective, qualitative experiences of consciousness, like the greenness of green, or the feeling of how something just is what it is.

Umwelt is a concept from biology and philosophy that refers to the “self-world”, or the “environment as experienced”, by a particular organism. It describes the unique, subjective sensory world that an animal perceives based on its biological and sensory abilities (which also ties into cognitive abilities to some extent; emotions can shift perceptions, among other things).

Cognitive light cone: “Each individual, based on its sensory and effector apparatus, and the complexity and organization of information-processing unit layers between them, can measure and attempt to modify conditions within some distance of itself. The size and shape of this cognitive boundary defines the sophistication of the agent and determines the scale of its goal directedness.” (source)

All of these deal with the perceptual side of things, except the cognitive light cone, which is more general and goal-oriented than purely perceptual. It asks how our light cone changes to accommodate new goals, and how changing it helps us reach them. That leans toward the cognitive side: enabling thinking along different routes, or modifying the mind in specific ways to do certain things, rather than just enhancing or augmenting the senses.

We have known since the 1960s, through a large body of research on a topic called “Sensory Substitution”, just how adaptable our minds are, how malleable our perception of the world outside them is, and how we can route information about that external world in through novel channels.

How data gets to the mind doesn’t matter as much as the relevance and consistency of the signal.

Sensory substitution largely deals with taking signals meant for one modality and routing them into another, by quantizing and converting the information using technology.

This has enabled deaf people to “hear” by turning audio into haptic vibrations on the skin, and blind people to “see” by turning visual information into auditory signals.
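For a rough feel of what “turning visual information into auditory signals” can mean in code, here’s a minimal Python sketch in the spirit of column-scanning image-to-sound schemes such as The vOICe: the image is swept left to right over time, each row gets its own pitch (higher rows, higher pitch), and pixel brightness sets loudness. The function name, frequency range, and timing are illustrative assumptions, not anyone’s actual implementation.

```python
import math

def image_to_audio(image, sample_rate=16000, column_duration=0.05,
                   f_low=500.0, f_high=5000.0):
    """Sonify a grayscale image (hypothetical helper, illustrative parameters).

    image: list of rows with brightness values in [0, 1]; row 0 is the top.
    Returns mono samples in [-1, 1], one time slice per column, left to right.
    """
    if f_high >= sample_rate / 2:
        raise ValueError("f_high must be below the Nyquist frequency")
    n_rows, n_cols = len(image), len(image[0])
    # Exponentially spaced pitches: top row -> f_high, bottom row -> f_low
    freqs = [f_high * (f_low / f_high) ** (r / max(n_rows - 1, 1))
             for r in range(n_rows)]
    samples_per_col = max(1, round(sample_rate * column_duration))
    out = []
    for c in range(n_cols):
        for i in range(samples_per_col):
            t = (c * samples_per_col + i) / sample_rate
            # Each bright pixel in this column adds a sine at its row's pitch
            s = sum(image[r][c] * math.sin(2 * math.pi * freqs[r] * t)
                    for r in range(n_rows))
            out.append(s / n_rows)  # keep the mix within [-1, 1]
    return out

# A 2x2 "image" with one bright pixel at top-right: silence, then a high tone
samples = image_to_audio([[0.0, 1.0],
                          [0.0, 0.0]])
```

The exponential pitch spacing is a design choice: equal frequency ratios tend to sound like equal steps, so rows feel evenly spaced to the ear.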

The science behind this adaptability comes largely from how we already form our qualia. Our sense of spatial position and depth is a combination of various sensory signals, merged and abstracted in different ways into knowledge of depth and location: binocular vision, hearing where sounds come from, and proprioception of our movement through space are all used to build up a sense of the locations around us.

While all this is cool, the science extends further, into sensory addition and expansion: using those same concepts to feed in novel signals beyond what our biology would otherwise have access to. That means catching drops from the vast storm of information, then crafting and filtering them to form specific aspects of qualia in an individual’s mind. Given new input signals that are consistent, reliable, and meaningful, the mind will construct new qualia, a new umwelt, over the course of weeks to months, integrating the patterns from the technology at deeper and deeper levels.

This has been shown to work by giving individuals depth-sensor readings for spatial awareness within buildings, north-sensing feedback, GPS guidance, and more.
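North sensing and GPS guidance both boil down to the same small calculation: given which way you’re facing, which actuator on a ring around the body (a belt or wristband) points toward the target bearing? A minimal sketch, assuming actuators are evenly spaced with index 0 at the front and indices increasing clockwise; the function name and layout are hypothetical. For north sensing the target bearing is 0°; for GPS guidance it would be the bearing to the next waypoint.

```python
def motor_for_bearing(target_bearing_deg, heading_deg, n_motors=8):
    """Pick the actuator that points toward a compass bearing (hypothetical layout).

    target_bearing_deg: where to point, in degrees clockwise from north
                        (0 for north sensing, waypoint bearing for GPS guidance).
    heading_deg: the direction the wearer faces, degrees clockwise from north.
    Assumes motor 0 faces forward and indices increase clockwise.
    """
    relative = (target_bearing_deg - heading_deg) % 360.0  # bearing relative to body
    step = 360.0 / n_motors
    return round(relative / step) % n_motors  # nearest motor, wrapping 360 -> 0

# Facing east (90 deg): north is directly to the left, i.e. motor 6 of 8
print(motor_for_bearing(0, 90))  # 6
```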

The range of what is possible, in terms of perspective and direct experience, is seemingly endless, so long as people keep making new ways to perceive and experience these signals.

Sensory weaving

So, this has led to my hobby of the past couple of years. I use the term “sensory weaving” to cover all of the substitution, expansion, and addition of senses and experiences, because that is what they actually do: convert information and thread new experiences in, moment to moment.

I’ve been running experiments in various areas, working toward some fun goals, and some of those experiments might also interest the fine folk here.

The goal is a device that modifies the cognitive light cone into many new, weird, and perhaps useful shapes. How we perceive shapes how we can even think. A blind individual doesn’t “picture” something in their mind the way a sighted individual would. A deaf person may not think in an auditory inner monologue (although even that varies between people), but rather in visuals or sign language.

Hence, the Sensory Weaver Mk2 - Lockpick (pick the doors of perception in the mind?)

A device that uses haptic feedback to transpile data from outside our biological senses into an experience in the mind.

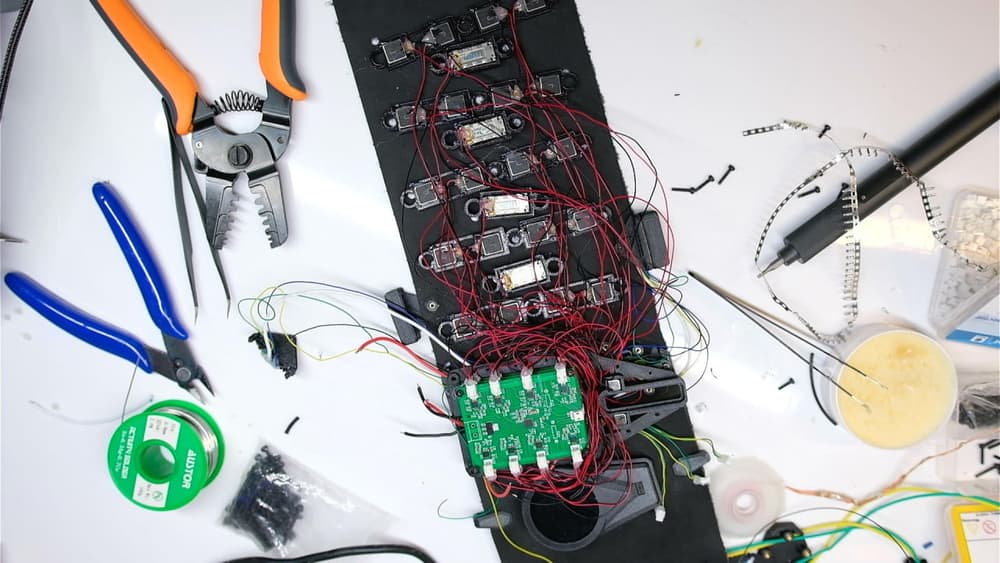

The current hardware is still in very early days, even if it is much more capable than it was: a wristband with 24 wideband LRA (linear resonant actuator) haptic motors, USB-C PD powered by a 10,000 mAh battery in my jacket pocket (way more than needed; it only draws about 1.6 A max with the current sensors). Some parts are off the shelf, and some are custom first-attempt PCBs to drive the LRAs (they work, but not as intended for proper frequency control; I’m working on new versions now).
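On the “proper frequency control” point: LRAs vibrate strongly only near their resonant frequency, so they’re driven with an AC waveform at (or tracking) that frequency. One simple approach is a sine lookup table played out through an H-bridge with PWM. A minimal sketch, assuming 8-bit PWM, a 64-step table, and signed values where the sign picks the bridge direction; the function names are hypothetical, and the 170 Hz in the example is only a placeholder, since the real resonance comes from the specific LRA’s datasheet (wideband LRAs are designed to respond over a broader range).

```python
import math

def lra_sine_table(n_steps=64, pwm_max=255, amplitude=1.0):
    """One period of a signed sine drive (hypothetical driver scheme).

    Sign selects H-bridge direction; magnitude is the PWM duty (0..pwm_max).
    amplitude in [0, 1] scales vibration strength.
    """
    amplitude = max(0.0, min(1.0, amplitude))
    return [round(pwm_max * amplitude * math.sin(2 * math.pi * i / n_steps))
            for i in range(n_steps)]

def step_interval_us(freq_hz, n_steps=64):
    """Microseconds between table steps so one period lasts 1/freq_hz."""
    return 1_000_000 / (freq_hz * n_steps)

table = lra_sine_table()
# Placeholder 170 Hz drive: about 92 us per step with a 64-step table
print(round(step_interval_us(170)))  # 92
```

Changing the step interval retunes the drive frequency without rebuilding the table, which is what makes this layout handy for sweeping toward resonance.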

It has modular sensor slots, so it can handle different sensory signals or combinations of them: mix and match, apply filtering, whatever. Combining things makes even more new perspectives possible.
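One way to picture modular sensor slots in software: every module, whatever it senses, hands back the same shape of data (say, a grid normalized to 0..1), so modules can be swapped or blended before anything reaches the actuators. A minimal sketch; the `SensorModule` protocol, `ConstantModule` stand-in, and weighted `mix` are hypothetical illustrations, not the device’s actual firmware.

```python
from typing import List, Protocol, Sequence

Frame = List[List[float]]  # rows x cols, each value normalized to [0, 1]

class SensorModule(Protocol):
    """Anything that can produce a normalized frame (hypothetical interface)."""
    def read_frame(self) -> Frame: ...

class ConstantModule:
    """Stand-in module for testing: always returns the same frame."""
    def __init__(self, frame: Frame) -> None:
        self.frame = frame

    def read_frame(self) -> Frame:
        return self.frame

def mix(modules: Sequence[SensorModule], weights: Sequence[float]) -> Frame:
    """Weighted per-cell blend of several modules' frames, clipped to [0, 1]."""
    frames = [m.read_frame() for m in modules]
    rows, cols = len(frames[0]), len(frames[0][0])
    out = [[0.0] * cols for _ in range(rows)]
    for frame, w in zip(frames, weights):
        for r in range(rows):
            for c in range(cols):
                out[r][c] += w * frame[r][c]
    return [[min(1.0, max(0.0, v)) for v in row] for row in out]

# Blend a "distance" frame and a "thermal" frame 50/50
blended = mix([ConstantModule([[1.0, 0.0]]), ConstantModule([[0.5, 0.5]])], [0.5, 0.5])
```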

I’ve been using these sorts of devices consistently for about a year and a half now, for personal research and a bit of exploration inside the mind. The first had an 8x8 distance sensor that gave almost a “whiskers” feeling of people passing by behind me, the outlines of objects, and more (its view cones out, and it was set to about 6’ of detection range, which gave roughly a 6’ grid of readings at that distance).
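Here’s a minimal sketch of how a “whiskers” mapping like that could work: nearer objects vibrate harder, anything past the ~6’ (about 1829 mm) cutoff is silent, and the 64 zones of the 8x8 grid get pooled down to fit 24 actuators. The 3-band-by-8-column pooling, the linear falloff, and the names are all assumptions for illustration; the actual mapping isn’t described here.

```python
MAX_RANGE_MM = 1829                   # ~6 ft detection cutoff
ROW_BANDS = [(0, 3), (3, 6), (6, 8)]  # hypothetical: 3 row bands x 8 columns = 24

def distance_to_intensity(d_mm, max_range_mm=MAX_RANGE_MM):
    """Linear falloff: near 1.0 when very close, 0.0 at or beyond max range."""
    if d_mm >= max_range_mm:
        return 0.0
    return 1.0 - d_mm / max_range_mm

def whiskers(grid_mm):
    """Map an 8x8 grid of distances (mm; <= 0 means no valid reading) to 24 intensities."""
    out = []
    for start, stop in ROW_BANDS:
        for c in range(8):
            valid = [grid_mm[r][c] for r in range(start, stop) if grid_mm[r][c] > 0]
            # The nearest object in each band/column drives its actuator
            out.append(distance_to_intensity(min(valid)) if valid else 0.0)
    return out

# Empty room except one object about 3 ft away, upper band, third column
grid = [[4000] * 8 for _ in range(8)]
grid[1][2] = 914.5
levels = whiskers(grid)
```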

The current wristband uses an 8x8 thermal sensor augment for now, with a similar view and reach to the whiskers one. It shares some similar aspects of qualia, while opening up more that I otherwise couldn’t have considered.
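Thermal grids need a slightly different treatment than distance: what stands out is warmth relative to the room, not absolute temperature. A minimal sketch, assuming the median cell of the frame approximates the background and that only cells some margin warmer than it should buzz; `thermal_frame`, `delta_min`, and `delta_span` are made-up names and tuning values, not ones from the device.

```python
import statistics

def thermal_frame(temps_c, delta_min=1.5, delta_span=6.0):
    """Turn an 8x8 grid of temperatures (deg C) into intensities in [0, 1].

    The median cell is treated as background. Cells must be delta_min warmer
    than it to register; full intensity at delta_min + delta_span above it.
    """
    background = statistics.median(t for row in temps_c for t in row)
    return [[min(1.0, max(0.0, (t - background - delta_min) / delta_span))
             for t in row]
            for row in temps_c]

# Room at 22 C, a warm body at 30.5 C, and a mildly warm object at 25 C
temps = [[22.0] * 8 for _ in range(8)]
temps[3][4] = 30.5
temps[1][1] = 25.0
frame = thermal_frame(temps)
```

Swapping in `abs(t - background)` would make cold spots register too.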

I know, people already have thermal sensing, but this enables perception beyond the biological. Direct heat or cold perception has limits before damage sets in, whereas this can turn those signals into meaningful patterns and offer greater discernment if wanted (I haven’t coded it to do so, since I have no need for it).

Then there are the other qualia that come out of it. Beyond thermal, and similar to the whiskers module, it can alert me to and give experiential knowledge of the spatial position of people, technically up to 23’ away in ideal conditions (though I’ve had it pick up extremes of over 100’ in super ideal settings).
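To turn “there’s someone over there” into a direction, one simple option is to find the warmest column and convert it to an angle across the sensor’s horizontal field of view. A minimal sketch; the function name, the 60° field of view, and the assumption that column 0 is the leftmost edge of the view are placeholders, since the real values depend on the sensor and how it’s mounted.

```python
def warmest_bearing(temps_c, fov_deg=60.0):
    """Angle (degrees) of the warmest column from the sensor's center line.

    Negative = left of center, positive = right, assuming column 0 is the
    leftmost edge of the view (sensors may be mirrored; check your mounting).
    """
    cols = len(temps_c[0])
    col_max = [max(row[c] for row in temps_c) for c in range(cols)]
    hottest = max(range(cols), key=lambda c: col_max[c])
    # Center of that column, mapped from [0, cols] onto [-fov/2, +fov/2]
    return (hottest + 0.5) / cols * fov_deg - fov_deg / 2

temps = [[22.0] * 8 for _ in range(8)]
temps[5][6] = 31.0  # someone slightly to the right
print(warmest_bearing(temps))  # 18.75
```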

It changes my sense of the world around me: I can remotely reach out, touch, find, and home in on the thermal signals nearby.

Those aren’t the only things I’ve tested, just the ones I use daily. I have other form factors too (a seat cushion for the car…, ankle/shoe testing for neuropathy balance fixes, etc.); the wristband is just easier for me to wear all day.

Any questions or comments are super appreciated. If something isn’t explained well or doesn’t make sense, let me know and I’ll try to re-articulate it or fill in the gaps I missed.

I’m curious what ideas others here might have for this sort of concept: what things to explore and experience.

Or what you’ve done yourselves, actually. I saw some people were using embedded magnets to feed in other data via induction? Super cool, especially with the directionality potential, like a laptop nub control thing, but for feedback to the self (and way cooler).

If anyone wants to read a really deep, rambling dive into the topic, or see more of the papers and science behind the concepts, check out my post on jailbreaking cognition.

This concept goes far beyond 1:1, or even filtered, sensory experiences of reality, into more platonic-form-type feedback, such as OBD-II data, emotional states, or otherwise. Abstractions we craft that have no direct signal correlate in reality can be experienced directly as a sense, injected into the cognitive side up through consciousness, changing an individual’s umwelt and connecting their internal perspective to much weirder and more wonderful things.
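As an example of feeding in an abstract signal, engine RPM over OBD-II is easy to decode: for mode 01, PID 0x0C, the two returned data bytes A and B give RPM = (256·A + B) / 4. Mapping that onto a 0..1 vibration intensity is then a design choice; the function names and the idle/redline values below are placeholders, and reading the raw bytes from the car (adapter, serial protocol) is left out.

```python
def decode_rpm(a: int, b: int) -> float:
    """OBD-II mode 01, PID 0x0C: engine RPM = (256 * A + B) / 4."""
    return (256 * a + b) / 4

def rpm_to_intensity(rpm: float, idle: float = 800.0, redline: float = 6500.0) -> float:
    """Linear map from idle..redline to 0..1 (placeholder values), clipped."""
    return min(1.0, max(0.0, (rpm - idle) / (redline - idle)))

# Response bytes "41 0C 1A F8" -> A = 0x1A, B = 0xF8
rpm = decode_rpm(0x1A, 0xF8)
print(rpm)  # 1726.0
```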

(Mods, if this is too much personal link sharing, let me know! I’m looking to share a cool project whose many parts I documented in one place, but I can share more of it here directly as needed, if removing external links is preferred.)