Might be worth looking to the Chinese on this one, they are producing modified 3070 16GB and 2080Ti 22GB cards (by replacing the 8Gbit stock VRAM ICs with 16Gbit ones and changing the strap resistor configuration to accept the swap) which might be in your budget (last I saw, <$400 but they might’ve gone up in price in this market)

The R720 will throw a max of 225 watts then. And the 3070 pulls 220 watts. I found posts of people claiming full load ratings of 225 even. I think that’s believable, tolerances vary and all.

(Note, 2080ti is 250 watts, so 25 watts over max)

I found videos and posts of people hand modding 3070s for 16gb vram. I also found a few news stories and such for chinese firms producing unsanctioned 16gb models. But I can’t actually find anything directly for sale. Alibaba is hot garbage to search.

I like it being greymarket. This appeals to me.

But… Being difficult to actually acquire, the possibility of craptastic quality control, and sucking every last watt with no leftover overhead, I think I’m gonna pass on this one.

Excellent thought, though!

You can limit NVIDIA GPU power consumption using the NVIDIA Control Panel or the nvidia-smi command-line tool, allowing you to adjust the power limit to a desired value for better efficiency or lower temperatures.

Here’s a breakdown of how to do it:

- Using the NVIDIA Control Panel:

- Access the Control Panel: Right-click on your desktop and select “NVIDIA Control Panel”.

- Manage 3D Settings: Navigate to “Manage 3D settings” in the left-hand menu.

- Power Management Mode: Locate the “Power management mode” dropdown and select “Prefer Maximum Performance” or “Normal” depending on your needs.

- Apply Changes: Click “Apply” to save your settings.

- Using the

nvidia-smicommand-line tool (Linux):

- Check Current Power Limits:

Use the command $ nvidia-smi -q -d POWER to see your current power limits.

- Set New Power Limit:

Use the command $ sudo nvidia-smi -pl <wattage> to set a new power limit (e.g., $ sudo nvidia-smi -pl 200 to set it to 200W).

- Confirm Settings:

Use the command $ nvidia-smi -q -d POWER again to verify the new power limit.

- Make changes persistent:

You can create a custom systemd service script to make the power limit setting persist across reboots.

2080ti with 22gb

And Blower Style

And And end of card power plug.

579.99 +20.00 shipping+tax

If I had the cash…

I just checked and these are still ~$360 before shipping/tax if you just buy them straight from China.

I do have a Leadtek blower one with end power connectors that I’d definitely be willing to part with, but I’m not certain it actually works so let me test that first and report back lol.

Personally I don’t think the end of card power connector is a good idea… especially if the card is anywhere near the max length the case can accommodate. The top of card power connector fits fine in the right side riser card slot.

Admittedly I’ve never messed with home assistant so it might not be possible there, but an easy solution I’ve implemented in some of my own personal projects is just streaming the AI’s responses and splitting it up by sentence. That way you can just send each individual sentence to be processed by the tts model as they’re generated and don’t have to wait for the full output.

Interesting… I don’t know if that will work because of the way the API call works at the transport layer. A call is made from the home assistant system to ollama, and while that connection is open the AI system generates its response and returns it. I don’t think the text to voice mechanism is even called until that responses received and the connection is closed.

For this to be possible, both sides would need to support some sort of streaming protocol that would maintain the connection but chunk the responses such that the text to speech system would be able to receive at least one chunk or sentence and start its job as additional chunks streaming, until the call is complete and the connection is closed.

How did you break up the stream? Did you put a proxy in the middle?

Again, no experience with home assistant specifically so good chance this won’t be applicable at all.

I didn’t need to proxy anything. The ollama API streams responses by default. I just concat the chunks until a full sentence has been received, then throw it into a queue to be processed and played by the tts model. Queueing the audio generation means that your tts system doesn’t need to support input streaming.

This is the bit of code I have to split out the sentences. Just a simple regex. I call it when I receive a chunk from ollama.

Code

// Split into sentences so that data can be processed before stream is finished

currentSentence[data.id] += data.chunk;

if (RegExp(/(\.{1,3}|\?|!)/gm).test(currentSentence[data.id])) {

// Send full sentence for TTS processing (also remove emojis)

speechThreads[data.id].enqueueTts(currentSentence[data.id].replaceAll(/\p{Emoji}/ug, '').trim(), null, data.user);

// Reset for new sentence

currentSentence[data.id] = "";

}

I took a very quick look through the code for the home assistant ollama component and it seems like they’re explicitly telling ollama to stream the responses already. It seems like it would be a relatively simple change to just capture that response as it’s being streamed if you’re so inclined.

What if it is talking while you are talking? Are you inputting text and getting speech back, or do you have to wait for the generation to finish before you talk? If so, how do you know if the generation is finished?

I recommend KoboldCpp over Ollama. They are both llamacpp forks with different philosophies. Koboldcpp is completely dedicated to open source and privacy while Ollama is simplicity at the expense of everything else.

Once you get beyond the basic ‘I want to run an AI’ Ollama is extremely limited. Also, their development doesn’t give back to upsteam: they rely on llama.cpp but add model support to it without making a compatible release back to llama.cpp so they can add their own support.

This smacks heavily of ‘embrace extend extinguish’ tactics used in the past by MS to destroy their competitors. Rumor is that ollama is working on their own incompatible backend to break away from llamacpp and take over the local AI ecosystem with a closed solution, but who knows what their motivation is. I also can’t stand that they rely on docker as an installer.

I’ve tried a couple different ways of handling talking over the AI. The method I eventually settled on was waiting for the user to stop talking, then sending the user’s audio over to the whisper model and getting the text as normal. That allows me to determine if it’s worth stopping the AI’s response early. For example if the user just gave an acknowledgement like “uh huh”, “ok”, etc. I don’t really want to interrupt generation, but if they asked a question it would probably be worth answering it immediately. It’s easy to determine if a message is worth keeping by using basic sentiment analysis, another lightweight model, or even just a whitelist of allowed phrases.

I’ve tried other methods such as stopping generation as soon as it detects speech, but that ended up being more annoying than anything. I feel like this way gives a good balance, and also lets you do other things like checking if the user was even trying to talk to the AI at all or was just talking to someone else in the room.

From a code perspective, ollama has the “done” field in every object of the stream. Just wait for it to be true and you can do whatever from there.

API docs.

I tried it a few months ago. It’s alright but I ended up preferring ollama. I’ll probably give it another shot when I have a reason to.

I don’t think they do? You can run them in docker but unless I’m missing something you definitely don’t have to, nor is it even the default I think?

Long story short, I bought two of the same cables that Amal modded to fit a 3060 into his R720, and I’m not gonna use them.

Free to good home. Two conditions.

- Actually have a server. I’d rather these not go to a “someday” project.

- If you’re only gonna need one, lemme know and maybe we help two people out.

Otherwise, just share the love people!

In the last couple of days on the R720:

- Installed the 1100w power supplies (no biggie)

- Updated Win 10 to Win 11 (fun fact, you have to use an INTERNAL usb port, located in a crevice near the power supply)

- Endured a multi day thunderstorm that eventually fried my Modem. (I’m hotspotting the phone now)

- Discovered I have an internal error code. “CPU 1 has an internal error (CPU IERR)”

I think it’s probably from having the power flicker, the case open while running, or from being upgraded. But I can’t figure out how to access, check, and clear it.

According to the internet, I should be able to access the System Event Log via IDRAC. So I went to restart, got in via the F2 key, and found the IP address 192.168.0.10

Restarted the machine, went into Firefox and tried to navigate to 192.168.0.10 and/or http://192.168.0.10/ neither of which went anywhere.

I’m probably missing something ridiculously simple, but anybody know where I’m going wrong?

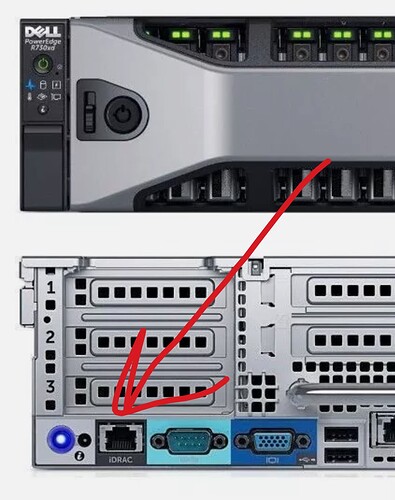

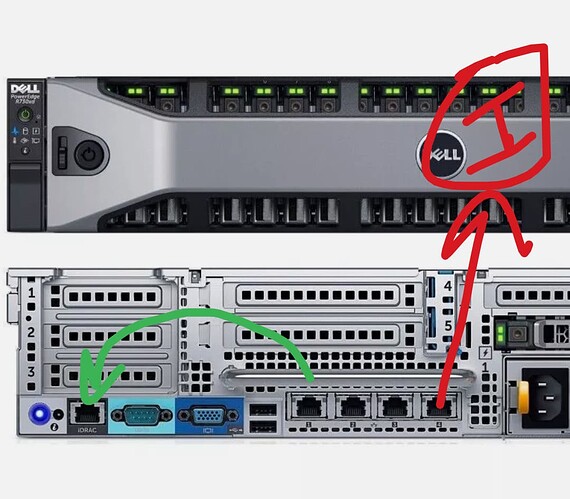

Did you plug in the iDRAC card NIC port? It’s all off by it’s lonesome…

Also the standard questions about your networking apply… did you add the 192.168.0.0/24 subnet to your computer NIC so you can talk to that IP address? Set your NIC IP to 192.168.0.5 or whatever.

Also set up a ping in a cmd prompt box so you can see if you’re even talking on the network…

ping 192.168.0.10 -t

Oh if you are on the same machine you can do something else like connect one of the quad port NICs to the Internet and probably get a subnet like 192.168.1.x/24 and manually set up another NIC for 192.168.0.5 and plug directly into the iDRAC…

You might need to check the BIOS though because it is possible to set the iDRAC to use one of the quad port NICs instead, and you don’t want that.

You know, sometimes I’m just able to sit back and marvel at how little I know. It’s actually a good thing, cause that’s the first step to actually learning something.

I think I’m gonna learn alot now. Thanks for the direction, I’m a gonna think about it for a bit and see what I can make of it.

I should really take some IT / Networking classes. Would be good for personal development.

I replaced my modem / router, so I’m back in action.

I logged into the modem, and I can see my PC, but no IDRAC. I moved the ethernet cable from the IDRAC port to one of the NIC ports, and now it shows up as the server name. Decided to reset the IDRAC from static to dynamic IP, and move the ethernet back to the IDRAC port, and suddenly it shows up where I can see it, GREAT!

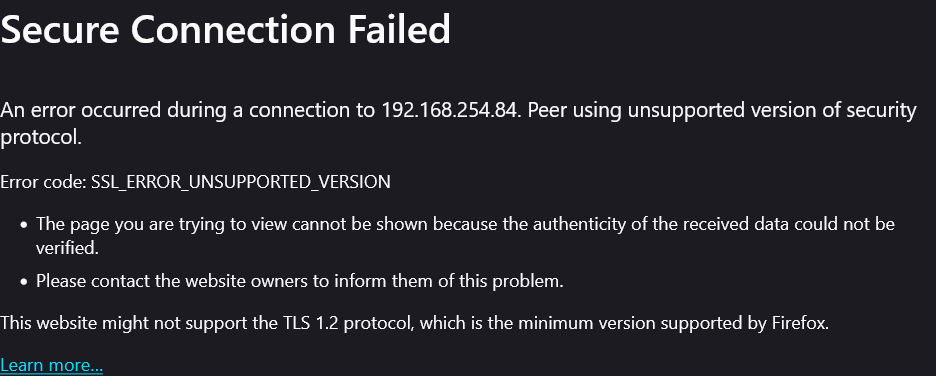

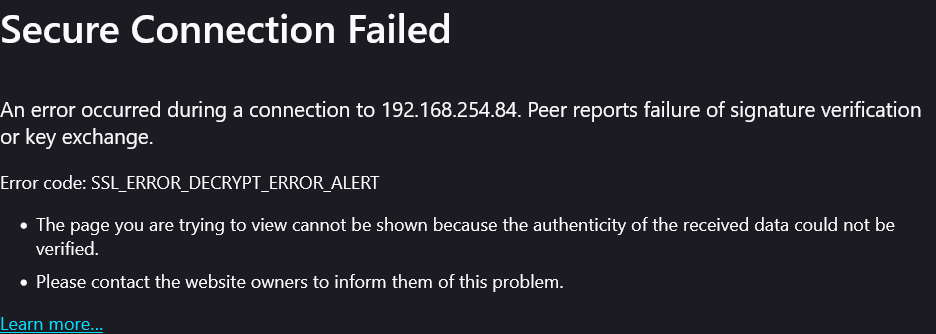

But, when I try to navigate to the IP address, I get:

Per Google, this is because the version of firefox I’m using doesn’t support the old security certs that the IDRAC is using.

I tried going into about:config and changing security.tls.version.min from 3 to 1, but that doesn’t seem to help much. It does slightly change the error message to:

What’s my best option here?

- “fix” firefox by modifying the about:config (if so what entry / values)

- Update the IDRAC somehow?

- Find and install a really old web browser?

Sorry for the vague ish questions, but google isn’t providing much clarity. Especially as the more authoritative sources presume that anyone working on such an issue would have a better grasp of networking than I do.

Fix Firefox to work, then try updating the iDRAC… I got Firefox working but don’t recall the steps j had to jump through. It was more than one setting if I recall. Consider asking chatGPT?