Absolutely… this will be great.

I’m at the just poking buttons stage.

I was able to get to the IDRAC access page by going into Firefox about:config and making the following changes:

- security.tls.version.min from 3 to 1

- security.tls.version.max from 4 to 1

- security.tls.version.fallback-limit from 4 to 1

The last two would not let me change the values unless I took an intermediate step of first setting them to a garbage value like 15153 or 46435, then setting them to 1. Also note, this completely boogers any and all websites. You have to change it back when done. There might be a cleaner method, but this worked.
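On the "cleaner method" point, one option might be a throwaway Firefox profile with these prefs in its `user.js`, so the everyday profile never gets touched. I have not tried this against an iDRAC, so treat it as a sketch: the pref names and values are the ones listed above, and `render_user_js` is just a helper name made up for this example.

```python
# Prefs and values copied from the about:config changes described above.
LEGACY_TLS_PREFS = {
    "security.tls.version.min": 1,
    "security.tls.version.max": 1,
    "security.tls.version.fallback-limit": 1,
}


def render_user_js(prefs):
    """Return user.js text with one user_pref() line per setting."""
    lines = []
    for name, value in prefs.items():
        lines.append(f'user_pref("{name}", {value});')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Paste the output into user.js inside a separate, dedicated profile
    # folder (assumption: you create that profile just for the iDRAC page).
    print(render_user_js(LEGACY_TLS_PREFS), end="")
```

Firefox reads `user.js` from the profile folder at startup, so the main profile keeps its normal TLS settings and there is nothing to change back afterwards.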

Next, I couldn’t get past the user login. I was trying the defaults, root and calvin. When that didn’t work I tried the generic user name and password that the previous owner had on Windows. No luck.

Tried to reset the password by holding down the lifecycle button for 15 seconds per google. No joy; it did not give the expected response either (fans cycling).

Next attempt, went into system via F2 on reboot and tried the same thing, got stuck at 1%. Would not progress.

Third attempt, went into Lifecycle controller via F10 on reboot and performed factory reset of IDRAC.

Now I cannot access via the IP address. (It does not show up in router again).

On the bright side, I appear to have cleared out whatever error it was reporting. I still want in.

Alright so, my NAS (i5 4670k 4c4t, 16gb DDR3) also serves as a little VM host for small temporary junk. It decided to shit itself and clear out the UEFI. Fixable, sure, but:

I have my r5 3600 6c12t, an old mobo, and 24gb of DDR4 (and the mobo will take ECC memory just fine). I will get an AM4 cooler in the mail today.

I have a quadro k2200 with 4gb of vram sitting on a shelf rotting.

I’m going to make the wild guess that messing around with image generation will not go well if I try to do that on a k2200 for funsies? Never messed with AI.

Aw heck yeah, finally a use for this old card.

Otherwise I don’t have much use for it.

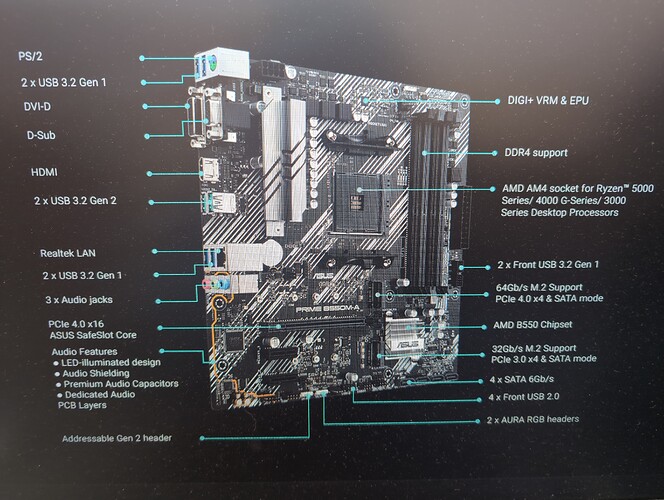

Any chance that MOBO has two PCIe slots? You might be able to franken-garbage a little higher?

Asus PRIME B550M-A

One of the slots will be taken up by my 2.5gbe NIC

I believe I will also have to throw in my pcie > 2x sata3 card in the other other slot… Maybe.

I’m tempted (for funsies) to set up my 16gb NVMe pcie3.0x2 optane drive as a swap-drive or a cache drive in zfs

I was wrong, you only have to use the enter button.

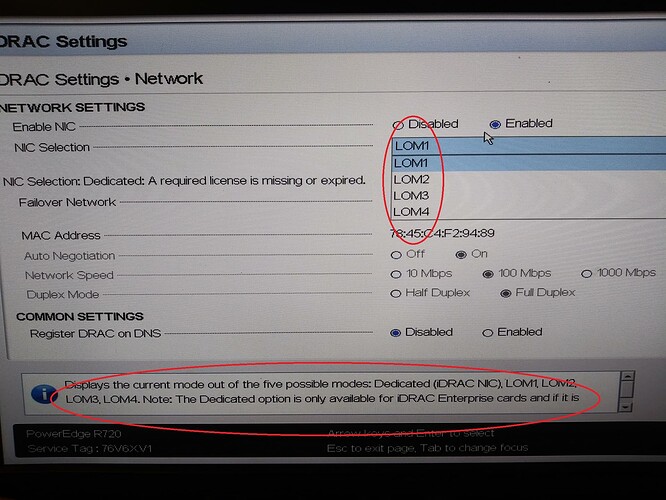

Somehow, I’ve borked the enterprise license for the IDRAC when I reset it. Or maybe I’m just mistaken and I actually used the NIC ports last time and just didn’t realize it. Anywho, that means I can’t use the dedicated IDRAC port, I have to use the standard NIC ports.

Anywho, I’m in now.

Major effort, minimum rewards.

hmm really?

- Press F2 to enter System Setup

- Navigate to iDRAC Settings

- Select Network

- Under NIC Selection, choose Dedicated (instead of Shared or LOM1/2/3/4)

- Configure for static IP

- Save settings and exit

- Reboot

Does that not work for you? Also…

- If you’re unsure of the IP address and need to reset iDRAC to defaults, press and hold the i button on the front panel for ~30 seconds.

- Dell provides a Lifecycle Controller (F10 during boot) that also allows iDRAC configuration under Hardware Settings.

The thing is, I think that I used the dedicated port before I factory reset the IDRAC to reset the password, but I’m not 100% sure. So either I did, and I need to reinstall the license or I never had it.

If I did have it, then I’ll have to acquire another copy to reinstall I believe. Given that that’s probably $$$ and it’s only a mild convenience to me, I’ll probably skip.

Hmm… I think enable it, then save, possibly reboot, then come back and it should be automatically selected.

As for the license… possibly… though I seem to recall that the license file only enables certain “OpenManage” enterprise features… the iDRAC card itself and all its normal features should work.

Welp, I crashed and burned. Hard.

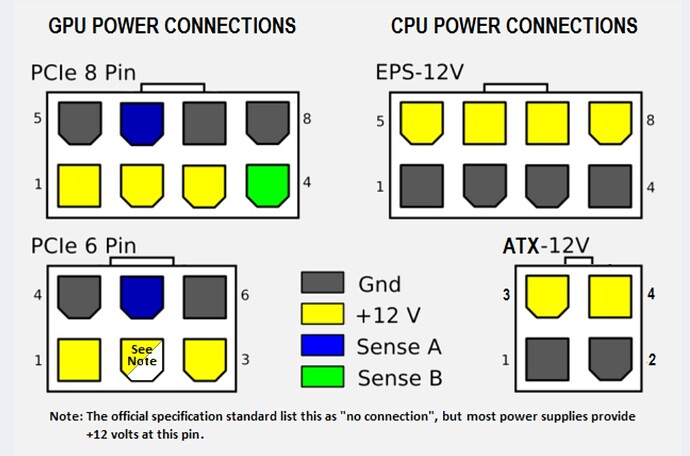

I made a set of cables and installed the GPU. Will not start.

Took it back apart and discovered I’d made an error. The DELL plug has 2 major differences from the EPS-12v plug: first, the jumper for the sense pin (I did that correctly), and second, for some reason Dell decided to completely swap the positions of all the positive and ground wires (this is where I FUBARed). I made mine like the EPS12v, not the DELL pattern.

That’s a dead short.

I disassembled my cables and rewired correctly.

Server will not start.

I removed the cables and GPU.

Server will not start.

I unplugged the server, pulled the Power supplies, yanked the CMOS battery, and held the power button for 30 seconds, then reinstalled everything with the original (750w) power supplies.

Server will not start.

When first plugged in, the blue led near the power button comes on, the power supplies illuminate, and I can briefly hear a light fan noise. Pushing the button extinguishes the blue light, and then 5-10 seconds later, I get 2 error messages on the LCD screen, both VLT0204, which is a main board voltage error.

Besides replacing the motherboard, anything else I should try?

Oh man… this is exactly what happened to me… but for whatever reason my power supplies sensed it and did not supply full power to the motherboard, instead erroring out. This is where I started to realize how to fix it;

I was super careful not to do it too. I just made my boo boo on the other end, because I made cables from scratch instead of repurposing the DELL style cables like you had.

My 2080ti draws too much power for a single cable. I was originally going to throttle it via the driver software, but…

I realized the other riser that I wasn’t using had a connector too. So I put one cable from riser 2 and one from riser 3 to get full power. (2x 150W)

So when I shorted it out, I did it with double the board-frying power.

Frack me.

I feel like I remember an iDRAC setting that you need to flip when it senses a short.

Holy shit I ran into this at work like a year and a half ago (cause was unknown, no GPU in system).

Try taking the server down to the minimum hardware config, meaning just CPU1, one DIMM in A1, and try one PSU at a time.

This cleared it for me (also reset the UEFI in the process, so I’d do that too)

Probably a slim chance, but a chance nonetheless.

(Also this was an r820)

I looked, idrac works fine BTW. All I can find that’s relevant is the system log messages showing “The system board fail safe voltage is outside of range” and “The system board 3.3V PG voltage is outside of range”. Per google, both point to motherboard issues.

On it. By “On it” I mean I’m going to go take a walk to cool down and relax, then I’m field stripping that MF.

Will report.

I had a nice relaxing cool down involving 2 scoops of triple chunk chocolate in a chocolate waffle cone. That’s (2x3C)+1C = 7 units of chocolate.

And… It’s still dead.

There’s an 85 dollar bare motherboard on ebay.

I could also buy an incomplete server. People always want to yank the hard drives like they’re protecting state secrets from the NSA. Seriously, I’m 100% gonna wipe your porn collection and reinstall the OS. Quit freaking out. Anyways, that could mean I end up with some spare parts, and if I find one with the right type of RAM, I could (uselessly) pad my RAM count.

I might have a lead locally. Kinda unlikely, but I’ll check that out first, then go bargain hunting on ye ol Ebay.

Edit: Local source not possible in immediate future. Ebay = Done it. New board and thermal paste on the way. The old stuff was way too “not liquidy” for my liking.

Now I just have to wait.

Again.

So I’ve stumbled onto a thing regarding the GPU power supply capabilities. FOR THE R720 ONLY.

The PCIe slot has 75w, and it’s been understood that the GPU power plug / cable is limited to 150w, as that’s the rated limit of the PCIe 8 pin connector (I guess that’s where it came from), giving an assumed limit of 225w.

I found references to the Server supporting two 300w GPUs.

HERE You can also find silkscreening on the riser 2 card stating the plug to be 225w, which with the additional 75w from the PCIe slot, would support the 300w claim. Note, there are two different riser 3 cards. The one with a single x16 slot also shows 225w, but the dual x8 card shows 150w, which I assume is due to 75w being reserved for the second PCIe slot.

This was apparently accomplished by DELL with a special cable from the server which Ys into two 8 pin connectors.

Keep in mind that that cable only supports the proprietary plugs on p40 and similar enterprise GPUs. But, an adapter cable such as what either I or Amal have done, could be run into a PCIe splitter so that each riser supports a gpu running dual plugs up to 300w total.

OK, so theoretically I could run dual 2080ti cards (250w), with upgraded memory, for a total of 44gb of vram, on an otherwise stockish system. I could also link both cards with the NVlink cable allowing them to directly share resources. (Note, case is tight to card there, might not physically fit, no way to verify)

At max load I’d be pulling 500w from the GPUs alone. Pretty sure the 1100w power supplies would be mandatory. Heat should be manageable as long as you weren’t running full blast all day, every day. (I think).
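For anyone who wants to sanity check the math, here is a rough sketch of the power budget using only the numbers quoted above (75w from the slot, plus the silkscreened plug rating per riser). The riser names and the helper functions are made up for this example, and it says nothing about what the power supplies or cooling can actually sustain.

```python
SLOT_W = 75  # power available from the PCIe slot itself

# Plug ratings as read off the riser silkscreen, per the post above.
RISER_PLUG_W = {
    "riser2": 225,
    "riser3_single_x16": 225,
    "riser3_dual_x8": 150,
}


def gpu_budget(riser):
    """Slot power plus plug power for a GPU on the given riser, in watts."""
    return SLOT_W + RISER_PLUG_W[riser]


def fits(card_w, riser):
    """True if a card's rated draw is within that riser's budget."""
    return card_w <= gpu_budget(riser)


if __name__ == "__main__":
    for riser in RISER_PLUG_W:
        print(f"{riser}: {gpu_budget(riser)} W budget, "
              f"250 W card fits: {fits(250, riser)}")

    cards_w = [250, 250]  # two 2080ti cards at their rated 250w each
    print("total GPU draw at max load:", sum(cards_w), "W")
```

By this math a 250w card fits on riser 2 or the single x16 riser 3, but not on the dual x8 riser 3.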

Am I going to try it? Nope.

I don’t have that much of a use case for that level of processing. Plus I’m broke, and GPUs cost. Still, it’s fun to think and plan just how far I could push it.

That is all.