So I’ve been working with various home automation solutions to make my home run more efficiently and to control things in intelligent ways. I run both Google Home and Amazon Alexa systems, and I find I need to put both in each room because each one is good at things the other isn’t.

For example, Google Home lets you create rooms and assign devices to them; Amazon Alexa does not. I can assign a Google Home device to a room along with the lights and other devices in it, and then address those devices much more simply. I can say “Hey Google, turn off the lights” and it will turn off every light in the same room as the Home device I’m speaking to. Alexa basically shits the bed if you try that with an Echo device.

Amazon’s Echo hardware is just better at hearing you speak; the microphones and voice processing are simply better. Alexa will also whisper back if you whisper to it, which is kind of a critical feature if you’re trying to get Alexa to do something in the middle of the night and don’t want “OK I HAVE DONE THE THING YOU ASKED FOR. BY THE WAY YOU CAN ORDER MORE DISHSOAP OR SCHEDULE YOUR PET’S EUTHANASIA BY SIMPLY SAYING ADD DISHSOAP TO MY SHOPPING CART OR KILL MY PET PLEASE.” bellowing out at volume 8 and waking up the whole house.

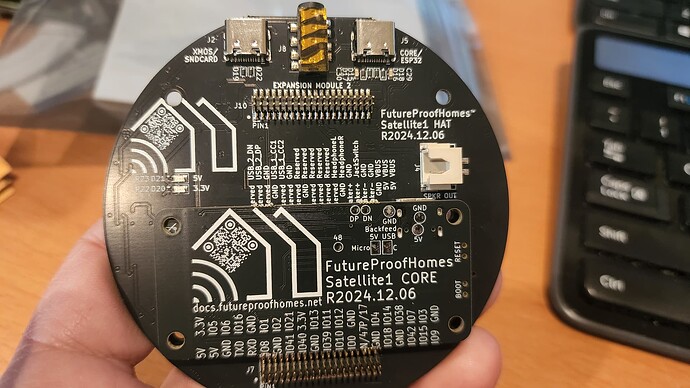

That said, Home Assistant has come out with its own voice hardware.

It’s a preview edition, and I bought five. It has a speaker output, which I highly recommend connecting to a proper speaker unless you don’t mind a tinny, terrible, barely audible built-in one. Audio limitations aside, it’s a self-contained device that puts me on the path to running my home automation entirely locally. I will say this, though: without a local AI conversation agent, the system is very, very dumb and borderline useless.

Setting up Ollama in a Docker container that used both of my GPUs was not super easy, but I had it up and running in a few hours. Adding the Ollama integration to Home Assistant was dead simple. Getting wyoming-whisper running as a separate service in its own Docker container, so it could also use my GPUs, was easy as well. What has not been easy is figuring out how to speed up the text-to-speech engine, which is currently the main cause of slow replies.
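Before tuning anything, it helps to confirm where the reply time actually goes by timing each stage of the voice pipeline on its own. The sketch below is a hypothetical harness, not part of Home Assistant: `fake_stt`, `fake_agent`, and `fake_tts` are stand-ins that only sleep to simulate work, and you would swap in your own calls to speech-to-text, the Ollama conversation agent, and the TTS engine.

```python
import time
from typing import Any, Callable

# Each stage takes the previous stage's output and returns its own output.
Stage = tuple[str, Callable[[Any], Any]]


def time_stages(stages: list[Stage], payload: Any) -> tuple[Any, dict[str, float]]:
    """Run stages in order and record wall-clock seconds spent in each one."""
    timings: dict[str, float] = {}
    for name, fn in stages:
        start = time.perf_counter()
        payload = fn(payload)
        timings[name] = time.perf_counter() - start
    return payload, timings


# Hypothetical stand-ins; the sleeps only simulate each stage's latency.
def fake_stt(audio: bytes) -> str:
    time.sleep(0.01)
    return "what time is it"


def fake_agent(text: str) -> str:
    time.sleep(0.02)
    return "The current time is one thirty three pm."


def fake_tts(text: str) -> bytes:
    time.sleep(0.1)
    return text.encode("utf-8")  # placeholder for synthesized audio


if __name__ == "__main__":
    pipeline = [("stt", fake_stt), ("agent", fake_agent), ("tts", fake_tts)]
    _, timings = time_stages(pipeline, b"")
    for name, seconds in timings.items():
        print(f"{name:>5}: {seconds * 1000:.0f} ms")
    print("slowest stage:", max(timings, key=timings.get))
```

With real stages plugged in, the stage with the largest share of the total is the one worth optimizing first.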

What I find super interesting about connecting an AI to a voice assistant is how natural language processing (NLP) lets you change its behavior simply by telling it to. Yes, this is how ChatGPT works, but after fiddling with config files, hunting for ways to change code to use updated libraries, and updating packages to make things work, being able to just tell the AI to change how it outputs data, and having it actually do so, was a breath of fresh air. Case in point: the time.

When I first connected everything together, my first voice query was simple: what time is it? The output (after several seconds of waiting) was “The current time is thirteen thirty three.” This is because the conversation agent output “The current time is 13:33.”, and the text-to-speech engine is not intelligent; it just converts the text to speech verbatim. In the Home Assistant voice agent settings, there are some basic staging instructions for the AI agent:

You are a voice assistant for Home Assistant.

Answer questions about the world truthfully.

Answer in plain text. Keep it simple and to the point.

I added one line to it:

Your text output is read by a text to speech processor which is not intelligent, so you will need to change numerical data like times and dates from numbers to spelled out words. For example, a current time of 13:33 should be changed to “one thirty three pm”.

After hitting save, I asked the voice assistant what time it was, and it replied “The current time is one thirty five pm”.
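The prompt tweak asks the model to perform a normalization step that could also be done deterministically before the text ever reaches the TTS engine. The sketch below is a hypothetical helper (not something Home Assistant, Ollama, or any TTS engine provides) that rewrites 24-hour `HH:MM` times into spoken words the way the added instruction describes. It handles only times; dates and other numbers would need their own rules.

```python
import re

ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven",
        "eight", "nine", "ten", "eleven", "twelve", "thirteen", "fourteen",
        "fifteen", "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = {2: "twenty", 3: "thirty", 4: "forty", 5: "fifty"}

# Matches 24-hour times such as 9:05 or 13:33 (hours 0-23, minutes 00-59).
TIME_PATTERN = re.compile(r"\b([01]?\d|2[0-3]):([0-5]\d)\b")


def number_to_words(n: int) -> str:
    """Spell out an integer from 0 to 59 in English words."""
    if n < 20:
        return ONES[n]
    tens, ones = divmod(n, 10)
    return TENS[tens] if ones == 0 else f"{TENS[tens]} {ONES[ones]}"


def time_to_words(hhmm: str) -> str:
    """Convert '13:33' to 'one thirty three pm', '00:05' to 'twelve oh five am'."""
    hours, minutes = (int(part) for part in hhmm.split(":"))
    suffix = "am" if hours < 12 else "pm"
    hour12 = hours % 12 or 12  # 0 and 12 both read as "twelve"
    if minutes == 0:
        return f"{ONES[hour12]} {suffix}"  # e.g. "three pm"
    if minutes < 10:
        minute_words = f"oh {ONES[minutes]}"  # e.g. "oh five"
    else:
        minute_words = number_to_words(minutes)
    return f"{ONES[hour12]} {minute_words} {suffix}"


def spell_out_times(text: str) -> str:
    """Replace every HH:MM time in text with its spoken form."""
    return TIME_PATTERN.sub(lambda m: time_to_words(m.group(0)), text)


if __name__ == "__main__":
    print(spell_out_times("The current time is 13:33."))
```

The trade-off is that a fixed rule like this never drifts or forgets, but it only covers the cases someone thought to code, whereas the prompt instruction lets the model handle formats nobody anticipated.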

This is like every 1980s and 1990s computer/AI interaction in a nutshell: the computer has a voice interface with pretty good NLP, but it’s still a computer and needs instructions to clarify and tweak its responses. I’m pretty sure I could have just told the model, by voice, to format its output properly, but that instruction would eventually fall out of the context window and wouldn’t last. Still, this is pretty amazing to me, even after using ChatGPT for so long. Running it locally and changing its behavior just by talking to it… that’s amazing.
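Why a saved instruction sticks while a spoken one may not can be sketched with Ollama’s chat API. In this minimal sketch, assuming Ollama’s default local endpoint `http://localhost:11434/api/chat` and a hypothetical model name, the saved agent instructions are sent as a system message at the start of every request, whereas anything said in conversation survives only as long as it stays in the `history` list the caller keeps. This illustrates the general pattern; it is not Home Assistant’s actual integration code.

```python
import json
import urllib.request
from typing import Any

# Assumptions: Ollama's default local endpoint and a hypothetical model name.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"
MODEL = "llama3.1"

# The saved agent instructions, resent with every request as the system message.
SYSTEM_INSTRUCTIONS = "\n".join([
    "You are a voice assistant for Home Assistant.",
    "Answer questions about the world truthfully.",
    "Answer in plain text. Keep it simple and to the point.",
    "Your text output is read by a text to speech processor which is not "
    "intelligent, so you will need to change numerical data like times and "
    "dates from numbers to spelled out words. For example, a current time of "
    '13:33 should be changed to "one thirty three pm".',
])


def build_chat_payload(history: list[dict[str, str]], user_text: str) -> dict[str, Any]:
    """Prepend the system instructions to whatever conversation history is kept."""
    messages = [{"role": "system", "content": SYSTEM_INSTRUCTIONS}]
    messages.extend(history)  # a spoken instruction lasts only while it remains here
    messages.append({"role": "user", "content": user_text})
    return {"model": MODEL, "messages": messages, "stream": False}


def ask(history: list[dict[str, str]], user_text: str) -> str:
    """POST one non-streaming chat request to Ollama and return the reply text."""
    payload = build_chat_payload(history, user_text)
    request = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["message"]["content"]
```

Calling `ask([], "What time is it?")` needs a running Ollama server; the point is that `SYSTEM_INSTRUCTIONS` rides along on every call, while a correction spoken mid-conversation is only remembered if it is kept in `history`.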